Data Mining And Scraping Using A Proxy

Data Mining With Proxy

For instance, some websites block visitors from particular regions. You can route your traffic through a proxy located in a suitable region to change your apparent location and access such websites.
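
Here is a minimal Python sketch of choosing a proxy by region, assuming you have one proxy endpoint per country. The hostnames, ports, and credentials below are placeholders, not a real provider's. The returned dictionary uses the `proxies` shape that the `requests` library accepts.

```python
# Hypothetical proxy endpoints per region; replace with your provider's real hosts.
REGION_PROXIES = {
    "us": "http://user:pass@us.proxy.example:8000",
    "fr": "http://user:pass@fr.proxy.example:8000",
    "de": "http://user:pass@de.proxy.example:8000",
}


def proxies_for_region(region: str) -> dict:
    """Return a proxies mapping (the shape `requests` accepts) for one region."""
    try:
        url = REGION_PROXIES[region.lower()]
    except KeyError:
        raise ValueError(f"no proxy configured for region {region!r}") from None
    # Route both plain HTTP and HTTPS traffic through the same regional proxy.
    return {"http": url, "https": url}


# Usage (needs `pip install requests` and a working proxy):
# import requests
# resp = requests.get("https://example.com", proxies=proxies_for_region("fr"), timeout=10)
```

The target site then sees the proxy's address, so it treats the request as coming from that region.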

Data Mining Techniques

Data collection, data preparation, and the interpretation of results are not part of the data mining step itself, but they belong to the overall KDD (knowledge discovery in databases) process as additional steps. Data mining is the process of discovering patterns in large data sets using methods at the intersection of machine learning, statistics, and database systems. It is an essential process in which intelligent methods are applied to extract patterns from data.
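
To make "discovering patterns" concrete, here is a deliberately small illustration: k-means clustering of one-dimensional values in plain Python. k-means is simply one common technique chosen for this sketch; the text does not prescribe a particular algorithm.

```python
def kmeans_1d(values, k, iterations=20):
    """Group numbers into k clusters with plain k-means (Lloyd's algorithm)."""
    if k < 1 or k > len(values):
        raise ValueError("k must be between 1 and len(values)")
    data = sorted(values)
    # Deterministic start: spread the initial centroids across the sorted data.
    step = max(k - 1, 1)
    centroids = [data[i * (len(data) - 1) // step] for i in range(k)]
    clusters = []
    for _ in range(iterations):
        # Assignment step: put each value in the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for v in data:
            nearest = min(range(k), key=lambda c: abs(v - centroids[c]))
            clusters[nearest].append(v)
        # Update step: move each centroid to its cluster mean (keep it if empty).
        new = [sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)]
        if new == centroids:
            break  # converged: assignments no longer change the centroids
        centroids = new
    return centroids, clusters


centroids, clusters = kmeans_1d([1, 2, 3, 10, 11, 12], k=2)
```

The two groups it finds (values around 2 and values around 11) are the kind of pattern a later step, such as a decision support system, could use.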

Data Mining With Proxies

If you are supplied with rotating proxies, also called dynamic proxies, your IP address keeps changing at regular intervals. Some are set to change every time you start a new session, while others change once a day. A backconnect node gives you access to the entire proxy pool, so you don't need a proxy list or multiple authentication methods.
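
With a backconnect gateway the provider rotates addresses on its side, but the idea of "changing at regular intervals" can be sketched on the client side. This minimal example assumes you hold a plain list of proxy addresses (hypothetical here) and picks the one that is active for the current time window.

```python
import time


def current_proxy(pool, interval_seconds, now=None):
    """Return the proxy that is active for the current time window.

    Every `interval_seconds` the choice advances to the next entry and wraps
    around, mimicking a rotating proxy (e.g. 86400 for a daily change).
    """
    if not pool:
        raise ValueError("proxy pool is empty")
    if now is None:
        now = time.time()
    window = int(now // interval_seconds)  # index of the current time window
    return pool[window % len(pool)]


# Hypothetical pool; real addresses come from your provider.
POOL = ["http://10.0.0.1:8000", "http://10.0.0.2:8000", "http://10.0.0.3:8000"]
```

Passing `now` explicitly makes the choice deterministic, which is handy for testing; in production you would leave it as the current time.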

Hiding Your IP Address

Based on the data, you can reshape your business's image and brand to suit market expectations and avoid damage. This practice (competitive price monitoring) aims to find out how the businesses you are competing with price their products or services. The scraping bots visit competitors' websites and extract their pricing data.
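
As a minimal sketch of the extraction step, the parser below uses Python's standard `html.parser` to collect prices from a page. It assumes a hypothetical layout in which prices sit in elements carrying a `price` CSS class; real competitor pages will need their own selectors, and the sketch ignores nested tags inside the price element.

```python
from html.parser import HTMLParser


def parse_price(text):
    """Turn text like '$1,299.00' into a float (assumes '.' is the decimal point)."""
    cleaned = "".join(ch for ch in text if ch.isdigit() or ch == ".")
    if not cleaned:
        raise ValueError(f"no price found in {text!r}")
    return float(cleaned)


class PriceParser(HTMLParser):
    """Collect the text of every element whose class list contains 'price'."""

    def __init__(self):
        super().__init__()
        self.prices = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value may be None.
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(parse_price(data))


html = '<div><span class="name">Widget</span><span class="price">$1,299.00</span></div>'
parser = PriceParser()
parser.feed(html)
```

After `feed`, `parser.prices` holds the numbers found on the page, ready to compare against your own price list.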

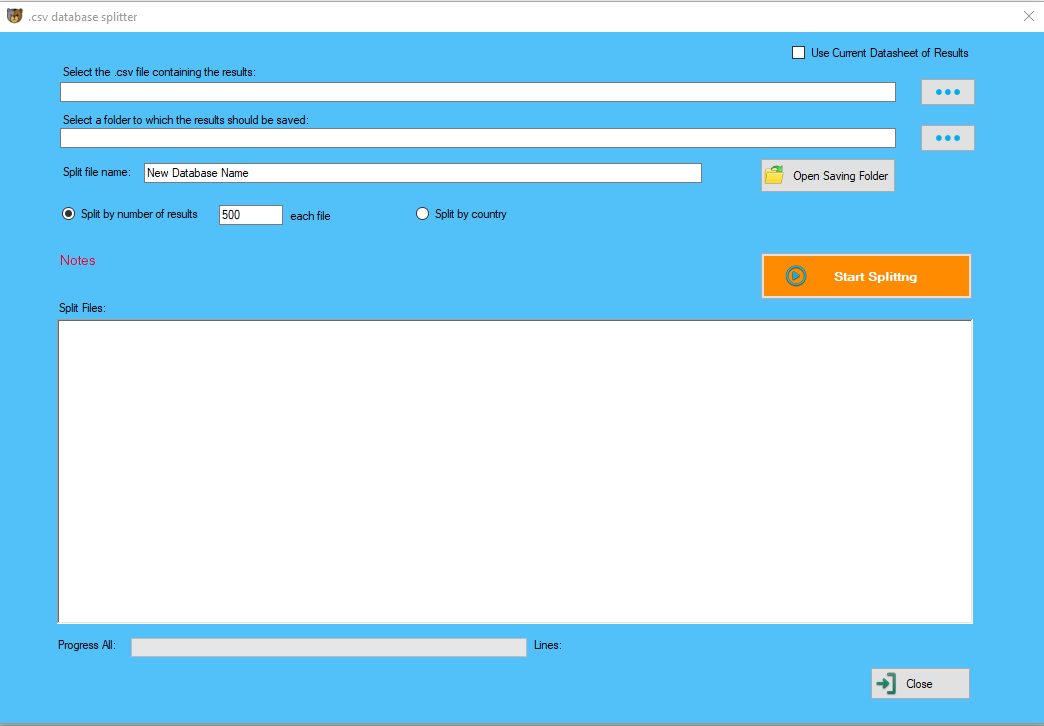

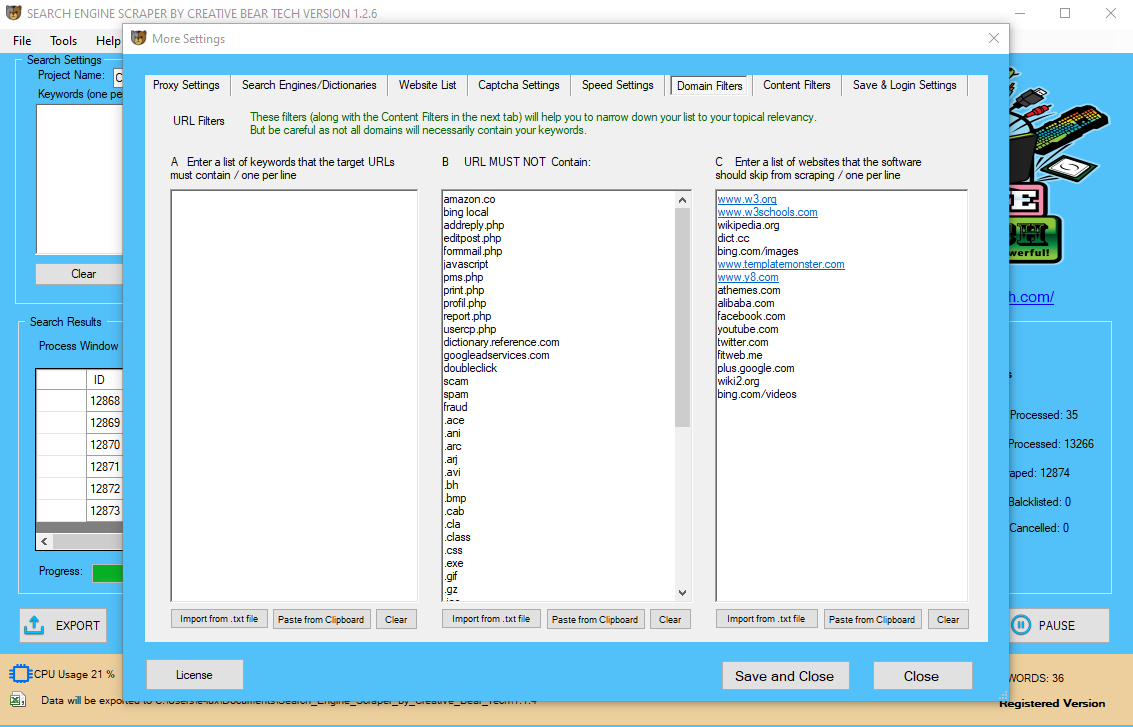

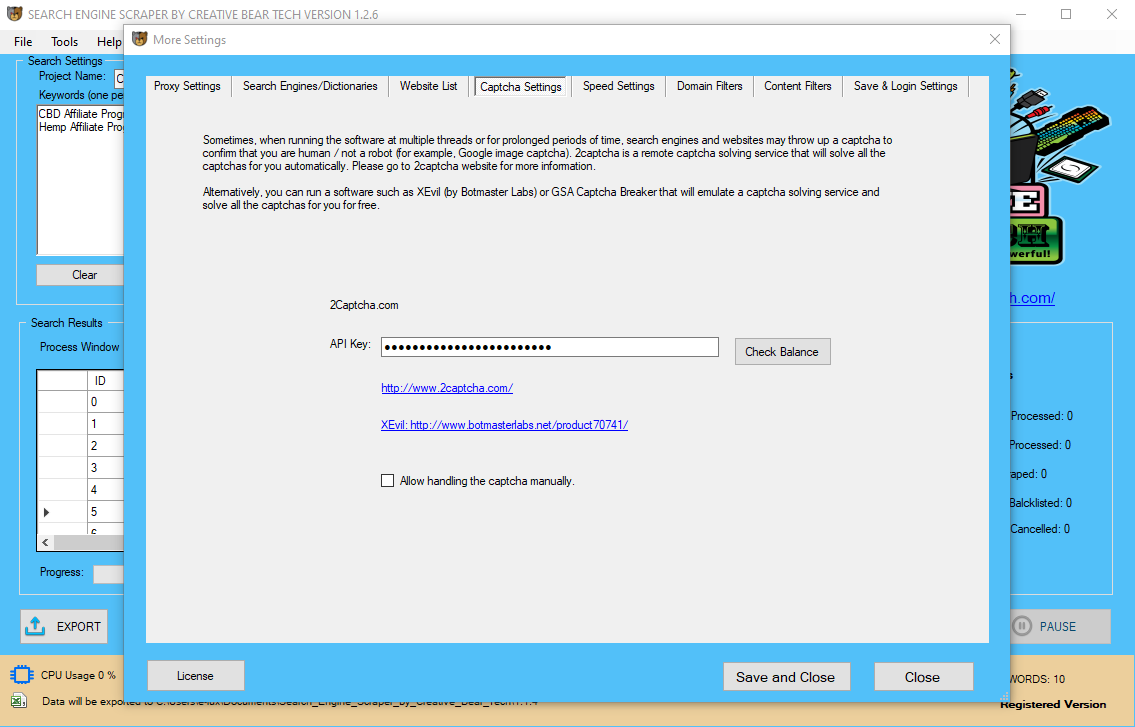

I need someone to create a rotating proxy API that provides me with clean, not over-used French proxies. Through web scraping, you can identify the news and online conversations that could affect your brand. Call us or email us today to learn how our private proxies can help you carry out efficient data mining. The anonymity also allows you to access websites that you might not normally be able to access.

Scraping software automates this kind of operation, gathering the data in a fraction of the time it would take a human to execute the same instructions. You need to find the data, access it (this is where you'll need a proxy), sample it and, if necessary, transform it. The traditional use of data warehousing involved storing data for dashboarding and reporting. Now, however, data warehouses are an essential part of the data mining process, because recent developments have made it possible to use them for mining. Some semi-structured and cloud data warehouses provide in-depth analysis of the data. The harvested data is then stored in a local storage system or database. A comprehensive analysis of the data will give you insight into the specific market dynamics you want to study.

This article is a small part of our “Ultimate Lead Generation Software Guide to Data-Mining Scraping with Proxies“. Picture yourself nearing the end of your process when your connection suddenly breaks and all your work is wasted. Now we come to the real aim of this article, which is to show how you can become better and more successful at data mining when you use a proxy server. Without scraping tools, the only way to access this information for personal use is to copy and paste it manually. This is a very tedious job, especially when it comes to large amounts of data.
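
For the "stored in a local storage system or database" step, a minimal sketch with Python's built-in `sqlite3` module could look like this. The table name and the `url`/`name`/`price` fields are assumptions for illustration.

```python
import sqlite3


def save_records(conn, records):
    """Store scraped records (dicts with 'url', 'name', 'price') in a local table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products ("
        " url TEXT PRIMARY KEY, name TEXT, price REAL)"
    )
    # INSERT OR REPLACE keeps one row per URL, so re-scraping a page updates it.
    conn.executemany(
        "INSERT OR REPLACE INTO products (url, name, price) VALUES (:url, :name, :price)",
        records,
    )
    conn.commit()


conn = sqlite3.connect(":memory:")  # use a file path such as "scrape.db" to persist
save_records(conn, [
    {"url": "https://shop.example/a", "name": "Widget", "price": 19.99},
    {"url": "https://shop.example/b", "name": "Gadget", "price": 5.0},
])
```

Keying rows on the URL is one simple design choice: repeated scraping runs overwrite stale prices instead of piling up duplicates.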

- Data center proxies are IP addresses of servers hosted in data centers.

- Since these IP addresses aren't assigned to a specific residential location, it's easier for websites to identify and block data center proxies than residential proxies.

- They are, therefore, less reliable, especially when mining data from well-protected websites.

- Web scraping is increasingly becoming a valuable technique for collecting large amounts of useful data.

- The most secure way to mask your real IP address is to use a proxy.

IP cloaking is a subtler and much more damaging way some websites deal with screen scraping. For instance, Amazon might simply show a set of incorrect prices for the products you are scraping, making your pricing data useless. Our shared US proxies span hundreds of subnetworks, so you will not be blocked or cloaked easily. It is estimated that 58.1% of web traffic is non-human, and that 22.9% comes from bots employed by businesses to collect information. Data-driven businesses cannot afford to ignore proxies and their role in collecting useful data efficiently. Now that we have a clear understanding of proxies and their role in web scraping, let us look at the ways different businesses use proxies for web scraping. As mentioned earlier, web scraping is the process of extracting large amounts of useful data from a website using an application or web scraping tool. Some proxies also render your content in an iframe or inject interstitial ads on every third or so request, in order to make money. Depending on the automated system you're using to harvest data, this can add junk fields or break your script. As we have shown, there are no viable alternatives to using proxies when gathering online data at scale. All of those methods have serious limitations and should be avoided if you are serious about collecting large amounts of accurate data. They can help you go undetected while web scraping, and you can automate the process via a command-line interface, crawling more pages at once since websites don't have to be rendered. Businesses use this data collection method for competitive intelligence and market analysis. Data from websites and social media can be collected for demand analysis and sentiment analysis.
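
One way to catch the junk fields and injected iframes mentioned above is to validate every response and record before saving it. The sketch below assumes a hypothetical record schema (`name` and `price`) and assumes that your target pages normally contain no iframes, so an iframe is treated as a sign of tampering.

```python
import re

# Hypothetical schema for one scraped product record.
EXPECTED_FIELDS = {"name", "price"}
IFRAME_RE = re.compile(r"<iframe\b", re.IGNORECASE)


def page_looks_tampered(html):
    """Flag a response containing an iframe (assumes target pages have none)."""
    return IFRAME_RE.search(html) is not None


def record_problems(record, max_price=100_000.0):
    """Return a list of problems with one record; an empty list means it passed."""
    problems = []
    extra = set(record) - EXPECTED_FIELDS
    missing = EXPECTED_FIELDS - set(record)
    if extra:
        problems.append(f"unexpected fields: {sorted(extra)}")
    if missing:
        problems.append(f"missing fields: {sorted(missing)}")
    price = record.get("price")
    # A price outside a plausible range may indicate junk or cloaked data.
    if "price" in record and not (isinstance(price, (int, float)) and 0 < price <= max_price):
        problems.append(f"implausible price: {price!r}")
    return problems
```

Records that fail these checks can be re-fetched through a different proxy rather than silently stored.
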
When it comes to extracting intelligence from such websites, findings show that Geosurf, Luminati, and Smartproxy should be among the top picks for this particular audience. What you do in this case is set up a script that rotates through a number of proxies in a list. You make request A from proxy A, then request B from proxy B, and so on down the list. Depending on the size of the database, it may take a while to preprocess all that data. For example, the data mining step may identify several groups in the data, which a decision support system can then use to obtain more accurate predictions. In the last stage of the process, a detailed plan for monitoring, delivery, and maintenance is developed and shared with business operations and organizations. Data is preprocessed by filling in any missing data or values, or by smoothing out noisy data. The initial step of data mining is business understanding, which means understanding the client's needs and defining your objectives accordingly. With a reliable backconnect proxy server, you will be able to collect data over a stable connection, more accurately and more quickly, all while staying safe and protected. Infinite redirect loops can easily be avoided by limiting the number of redirects allowed in your data scraping framework. For example, if you set the limit to 5, the loop will stop after visiting 5 URLs. As websites continue to improve, it has become increasingly difficult for data scraping tools to extract and store data accurately. Scraping can also fail because your own server provides an unreliable connection. You simply must have a good connection for all steps of data mining, regardless of the technique you are using. At present, there are plenty of ‘mining’ processes that people talk about.
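
The preprocessing described above (filling in missing values and smoothing out noise) can be sketched in a few lines of Python. Mean imputation and a centered moving average are two simple choices made for this illustration; the text does not name a specific method.

```python
def fill_missing(values):
    """Replace None entries with the mean of the known values (mean imputation)."""
    known = [v for v in values if v is not None]
    if not known:
        raise ValueError("no known values to impute from")
    mean = sum(known) / len(known)
    return [mean if v is None else v for v in values]


def moving_average(values, window=3):
    """Smooth noisy values with a centered moving average (use an odd window).

    Near the edges the window is truncated, so fewer points are averaged.
    """
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        chunk = values[lo:hi]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


clean = moving_average(fill_missing([1.0, None, 3.0, 4.0, 5.0]))
```

Imputation runs first so that the smoothing step never sees a missing value.
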
Cryptocurrency mining is one very popular example, so you might think that data mining is similar to it. Data mining, however, is an excellent tool for finding and evaluating the right data for your business requirements. Many techniques are used for this purpose, depending on what is feasible for the organization or team. These techniques make use of AI, machine learning, and database management to deliver the best results. The data is extracted automatically and saved in a local file or database on your computer. So, how can businesses access and extract such data more efficiently? Websites generally do not provide a way to save or export a copy of the data displayed on their pages. At some point, say at request L, you loop back to proxy A, because request A was made some time ago. There are also all the usual issues with using a proxy server, which is usually located far away, often abroad. This adds latency to requests, making your operations take longer. Proxies are notoriously fickle and prone to dropping requests, all of which causes problems. To handle figures and numbers on your computer, you can use spreadsheets and databases. Can you imagine how much time you'd waste if you had to manually copy and paste every piece of data you need from a website? The only downside is that these browsers use a lot of RAM, CPU, and bandwidth, so this option only suits those with a powerful setup. When you need to collect small amounts of data, where IP blocking is unlikely to be an issue, proxies can be slower to use and incur additional costs.
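
The round-robin scheme described above (request A through proxy A, request B through proxy B, then looping back) can be combined with a simple retry, since proxies are prone to dropping requests. In this sketch, `fetch(url, proxy)` is a hypothetical caller-supplied function; the commented usage shows one way to build it with the `requests` library, whose exceptions derive from `OSError`.

```python
from itertools import cycle


def fetch_with_rotation(urls, proxies, fetch, max_attempts=3):
    """Fetch each URL through the next proxy in a round-robin cycle.

    `fetch(url, proxy)` returns the page body or raises OSError when the proxy
    drops the request; on failure we move on to the next proxy in the cycle,
    up to `max_attempts` tries per URL.
    """
    if not proxies:
        raise ValueError("need at least one proxy")
    rotation = cycle(proxies)  # A, B, C, ..., then back to A
    results = {}
    for url in urls:
        last_error = None
        for _ in range(max_attempts):
            proxy = next(rotation)
            try:
                results[url] = fetch(url, proxy)
                break
            except OSError as exc:  # dropped request: try the next proxy
                last_error = exc
        else:
            raise RuntimeError(f"all {max_attempts} attempts failed for {url}") from last_error
    return results


# Usage with requests (assumption: `pip install requests`):
# import requests
# def fetch(url, proxy):
#     r = requests.get(url, proxies={"http": proxy, "https": proxy}, timeout=10)
#     r.raise_for_status()
#     return r.text
```

Keeping `fetch` separate from the rotation logic makes it easy to swap HTTP clients or test the rotation offline.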

As a result, you may get irrelevant data which, if used, can lead to wrong business decisions and losses. The number of companies using web scraping has increased dramatically over the last couple of years. There are dozens of ways our clients use our proxy network for web scraping. Even though every scraping attempt and target is unique, each of them is governed by an underlying need to stay fast, anonymous, and undetected. Now that you're prepared and know what obstacles to expect, it's time to discuss the best tools for data mining. Some websites deploy infinite loops as a security measure to mislead a data scraping bot when it hits a honeypot. Therefore, your best bet is to get a backconnect proxy as soon as you can. It will give you all the prerequisites for smooth and successful data mining. Even when you just google something, you are exposed to various malicious cyber activities. This risk greatly increases if you use your computer for data mining or similar advanced processes. There are many tracking and surveillance bots online that can use your traffic and access your browser for malicious purposes. More people are, therefore, committed to making themselves invisible to these and other digital systems. So, how do you hide your scraping activity and keep your software from being blocked or fed fake data? First, you need to understand how web scraping detection techniques work.
If the website owner realizes that a particular visitor is not a real human but a bot, nothing stops them from blocking it or even misleading the competitor by showing fake data to the bot. Another problem with your existing server is that some websites may block it because of its location. In addition, for the same reason (the time-consuming process), you can easily get banned by your target website's server. After a while and many operations, any server will start getting suspicious of your activities.
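
Infinite redirect loops, one of the traps mentioned above, are defused by capping the number of redirects a scraper will follow. If you use the `requests` library, `Session.max_redirects` sets this cap and exceeding it raises `requests.exceptions.TooManyRedirects`. The sketch below shows the same logic offline, with a hypothetical dictionary standing in for the server's redirect responses.

```python
def resolve(url, redirects, max_redirects=5):
    """Follow redirects (a url -> next-url mapping), never more than max_redirects.

    The mapping stands in for the server's Location headers so the logic can
    run without network access.
    """
    seen = [url]
    while url in redirects:
        # len(seen) - 1 redirects have been followed so far.
        if len(seen) > max_redirects:
            raise RuntimeError(f"more than {max_redirects} redirects: {' -> '.join(seen)}")
        url = redirects[url]
        seen.append(url)
    return url


# A honeypot-style loop: /a redirects to /b, which redirects back to /a.
LOOP = {"/a": "/b", "/b": "/a"}
```

With a limit of 5, a loop like `LOOP` is abandoned after five hops instead of trapping the scraper forever, while ordinary short redirect chains still resolve normally.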